In an era where organizations are drowning in data despite the growing use of customer data platforms, it’s tempting to believe that the path to sharper customer understanding is simply acquiring more of it. New feeds, new tools, new sources: more inputs should mean better insights. But as the rapid rise of general-purpose AI tools reshapes how teams collect and interpret information, a quieter, more pervasive problem is emerging: accuracy is slipping even as data volume grows.

The question facing organizations today isn’t whether they need more data. It’s whether they can trust the data—and AI-generated insights—they already have. Let’s explore the topic below.

Customer data integration isn’t the problem — understanding it is

Many organizations assume the answer to stronger customer understanding is more data. They subscribe to additional feeds, adopt more monitoring tools, and pull from a growing list of public sources. But the reality is more complicated. The rise of general-purpose AI tools has changed how teams find and interpret information, and not always for the better.

A growing number of innovation leaders, product marketers, and insights teams now rely on Gen AI assistants such as ChatGPT, Copilot, Gemini, and Perplexity to gather competitive and market intelligence. These tools scrape massive volumes of publicly available information from news sites, social media, company pages, online forums, and more. Many organizations even build their own lightweight agents that generate real-time alerts, summarize competitor moves, or automatically refresh battle cards and internal deliverables that feed Go-to-Market (GTM) alignment.

At first glance, it feels like a shortcut to better intelligence. But this approach introduces a significant problem that most teams do not recognize until it is too late: distorted or incomplete data.

A recent study from the European Broadcasting Union and the BBC found that general-purpose AI tools distort news content nearly half the time, with 45% of the AI answers evaluated containing at least one significant issue, such as hallucinated details, outdated or mismatched information, misinterpreted facts, or incorrect sourcing. The full study explains that these tools frequently provide confident but unverifiable statements, omit essential context, and blend real information with fabricated elements that appear plausible enough to go unnoticed.

This creates a silent risk inside organizations. If a team uses these tools to shape competitive assessments, strategic briefs, feature prioritization, investment cases, or messaging decisions, they may be acting on incomplete or inaccurate intelligence without realizing it. The outputs feel polished and authoritative, but the source trail is thin or missing. There is no easy way to confirm whether an insight is fully accurate, partially distorted, or completely incorrect.

For high-stakes decisions, this becomes more than a research flaw. It becomes an operational liability.

The gap between collecting data and interpreting it with generic customer data integration tools

General-purpose AI can be extremely useful for early-stage exploration, rapid summarization, or generating directional hypotheses. But without clear attribution, trusted data sourcing, or built-in validation methods, these tools cannot be treated as reliable systems of record for market or competitive intelligence. They were not designed with the governance, evidence standards, or domain-specific understanding required for enterprise decision-making.

This is why so many organizations experience growing friction inside their insights workflows. They have more data than ever, yet the confidence in that data keeps dropping. They collect huge amounts of information, yet they lack a single place where trusted, verified, and context-rich insights are connected and ready for use. As a result:

- Teams struggle to distinguish between accurate data and distorted data

- Research becomes harder to trace back to a reliable source

- Decisions take longer because verification becomes a manual burden

- Leaders question whether they can trust the intelligence presented to them

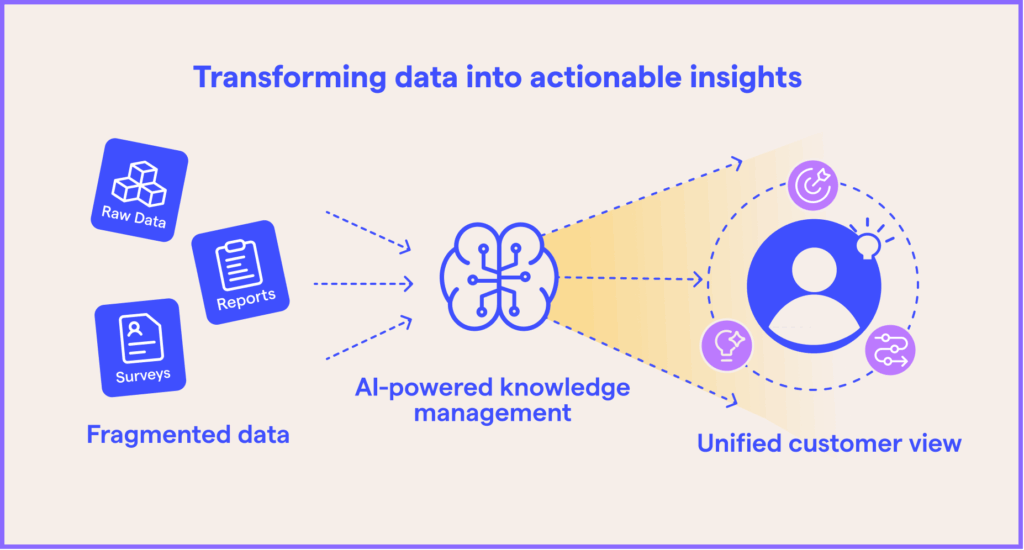

The core issue is not a lack of data. It is the lack of structure, validation, and integration needed to turn data into dependable knowledge. The reliance on general-purpose AI tools amplifies this problem by rapidly generating outputs that cannot always be traced or verified.

Traditional and generic customer data integration tools reach their limits quickly

Many teams try to solve fragmentation by adding generic integration tools such as spreadsheets, basic connectors, file-syncing utilities, or ETL platforms. While these options can move data from one system to another, they are not built to understand the meaning behind the customer or market information.

Generic customer data integration tools struggle with:

Data relationships: Customer records connect to feedback, research findings, behavioral analytics, purchase history, and demographic information. Traditional tools move these fields but do not preserve contextual links.

Semantic consistency: A single concept may appear under multiple labels. Tools that do not understand business-specific terminology cannot align these variations without significant manual effort.

Research methodologies: Market and consumer research relies on structured and unstructured data that often requires context to interpret. Generic pipelines treat all inputs as simple tables and fail to carry methodology, confidence level, sample frame, or time horizon forward.

Governance and trust: Without end-to-end visibility, teams cannot easily confirm whether a dataset is recent, verified, or complete. Leaders grow hesitant to rely on analytics that have unclear origins.

This creates a pattern where integration succeeds on a technical level, yet teams still cannot use the information effectively. The data is accessible, but not truly integrated.
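To make the semantic-consistency and methodology points more concrete, here is a minimal Python sketch of what preserving meaning during integration could look like. Everything in it is an illustrative assumption rather than any vendor's actual implementation: the `CANONICAL_TERMS` alias table, the `Finding` record, and the field names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical alias table: business-specific labels mapped to one canonical concept.
CANONICAL_TERMS = {
    "nps": "net_promoter_score",
    "net promoter score": "net_promoter_score",
    "promoter score": "net_promoter_score",
    "churn": "customer_churn",
    "attrition": "customer_churn",
}


@dataclass(frozen=True)
class Finding:
    """A research finding that keeps its methodology attached."""
    concept: str
    value: float
    methodology: str   # e.g. "online survey"
    sample_frame: str  # who was asked
    confidence: str    # e.g. "high" or "directional"
    period: str        # time horizon the finding covers


def normalize_concept(label: str) -> Optional[str]:
    """Return the canonical concept for a label, or None if it is unknown."""
    return CANONICAL_TERMS.get(label.strip().lower())


def integrate(raw: dict) -> Finding:
    """Map a raw record to a Finding without dropping its research context."""
    concept = normalize_concept(raw["label"])
    if concept is None:
        # Unknown labels are surfaced for review rather than guessed.
        raise ValueError(f"unmapped label: {raw['label']!r}")
    return Finding(
        concept=concept,
        value=float(raw["value"]),
        methodology=raw["methodology"],
        sample_frame=raw["sample_frame"],
        confidence=raw["confidence"],
        period=raw["period"],
    )
```

In this sketch, records labeled "NPS" and "Net Promoter Score" resolve to the same concept, and each finding carries its methodology, sample frame, confidence level, and time horizon with it instead of being flattened into a bare number.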

There is, however, another approach. A category of purpose-built competitive and market intelligence platforms exists specifically to address these challenges. These systems prioritize high-quality data sources, transparent attribution, citation-ready evidence, and the ability to work with proprietary research that generic AI models cannot access. They surface insights with far stronger accuracy because they ground their outputs in validated, traceable information.

The difference is not subtle. When an organization uses trusted, integrated, and well-governed data, the intelligence that emerges becomes significantly more reliable. Teams waste less time validating facts and more time applying insight, while leadership gains confidence that strategic decisions are grounded in real, non-distorted signals.

To unlock value, organizations need tools that understand customer and market data at a deeper level.

This is where a strong data integration strategy begins. Not with acquiring more data, but with ensuring that what you already have is connected, validated, and easy for teams to use without second guessing its accuracy.

Many organizations see measurable improvements once they adopt a more modern integration strategy. Teams can answer business questions faster, collaborate more effectively, and pursue opportunities with greater confidence. Leaders gain clarity about what is happening in the market and why.

The shift is not about technology alone. It is about making knowledge universally accessible rather than locked in disconnected pockets.

Building a strong data integration strategy that is ready for scale

A successful customer data integration strategy is not defined by how many systems it connects but by how reliably teams can use the information afterward. An effective strategy includes the following principles:

Starting with trusted data: The best integration process is one that adapts to your existing sources rather than forcing everything into a rigid, one-size-fits-all model. Tools should respect your current structures, not erase them.

Prioritizing semantic understanding: An integration layer must understand what the data represents, not just where it resides. This means aligning definitions, linking related concepts, and preserving methodology.

Ensuring trust and data governance from the start: Access control, versioning, and lineage tracking protect the integrity of insights. Consistent governance encourages teams to trust the information they use.

Supporting multiple structured and unstructured data types: Modern customer understanding requires surveys, CRM signals, sales performance, third-party reports, transcripts, trend data, and behavioral analytics. A strong integration strategy must bring all these together without losing fidelity.

Focusing on accurate interpretation, not just aggregation: Bringing data into one place is only the first step. Teams also need clarity on how to interpret it correctly. The recent EBU and BBC analysis found that large language models often misinterpret information even when the underlying facts exist, leading to summaries that omit context or rearrange details in misleading ways. The report noted that nearly a third of evaluated AI outputs contained framing or tonal inconsistencies that altered the meaning of the original content. This underscores the importance of an integration layer that preserves context, methodology, and source metadata so teams do not misread or misapply insights.

Enabling activation, not storage: The end goal is not a larger archive. It is a system that reduces search time, speeds up decision-making, and creates a foundation for AI-driven insight generation.
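As a rough illustration of the governance and lineage principles above, the following Python sketch flags insights that should not be relied on yet. It is a simplified example under stated assumptions: the `Insight` record, the `trust_issues` function, and the 180-day freshness threshold are all hypothetical and would need to reflect your own governance rules.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List, Optional


@dataclass
class Insight:
    claim: str
    source: Optional[str]  # citation or document reference; None if untraceable
    retrieved_on: date     # when the underlying data was collected
    verified: bool         # whether a person or process confirmed it


def trust_issues(insight: Insight, today: date, max_age_days: int = 180) -> List[str]:
    """List the reasons an insight should not be relied on yet (empty if none)."""
    issues = []
    if not insight.source:
        issues.append("no traceable source")
    # The 180-day default is an assumed threshold, not a recommendation.
    if today - insight.retrieved_on > timedelta(days=max_age_days):
        issues.append("data is stale")
    if not insight.verified:
        issues.append("not verified")
    return issues


# Example: a polished AI-generated summary with no source trail.
ai_summary = Insight("Competitor X cut prices", None, date(2025, 1, 10), False)
print(trust_issues(ai_summary, today=date(2025, 1, 15)))
# prints ['no traceable source', 'not verified']
```

A check like this does not make an insight accurate, but it makes missing lineage visible before the insight reaches a strategic brief or a battle card.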

Better data integration and better insights lead to better decisions — via the right customer data platform

Gathering more data is not the answer to better understanding — and thus, better decisions. What teams truly need is a system that connects and correctly interprets what they already have, at scale. When insights, research, and customer data can work together, organizations unlock speed, accuracy, and strategic clarity.

A specialized, purpose-built AI-for-insights platform like DeepSights supports this shift by centralizing knowledge, applying AI to interpret it, and presenting insights with reliable evidence. It gives teams confidence that the information they are using is relevant, complete, and supported by the full breadth of the organization’s market knowledge.

Better integration leads to better judgment, stronger innovation, and more effective customer strategies. And it all starts with giving your data a structure that makes sense for how your business works.

Market Logic brings transformative customer data integration capabilities together in one comprehensive platform with its award-winning solution, DeepSights, to drive better decision-making and business growth. This centralized knowledge management system is designed to enhance the way your teams capture and analyze data, turning it into actionable insights. Request a demo today to learn more.