Artificial intelligence (AI) is rapidly reshaping how organizations collect, process, and leverage market research, offering unprecedented opportunities for generating deep, actionable insights. However, this transformative power comes with a critical challenge: the potential for AI bias. If not carefully managed, the AI technology that powers certain knowledge management systems (including AI in healthcare knowledge management) can inherit or develop biases that skew results, leading to flawed market analysis and, ultimately, misguided business decisions. Such bias can significantly undermine the value of market research investments.

As organizations increasingly rely on AI for critical insights generation and decision-making, the risks associated with biased and inaccurate data are escalating. Biased outputs can result in a significant competitive disadvantage, flawed strategic decisions, misallocated resources, and missed market opportunities. Therefore, ensuring your AI-powered knowledge management system (KMS) delivers accurate and evidence-based insights is non-negotiable for success.

In this article, we’ll explore the consequences of AI bias in market research knowledge management (KM), examine its causes, and provide practical strategies for mitigation. We’ll also demonstrate how purpose-built Retrieval-Augmented Generation (RAG) solutions enable insights integrity and deliver insights grounded in reliable evidence.

Understanding AI bias in knowledge management

AI bias in knowledge management refers to systematic errors or unfair preferences within AI algorithms that distort how information is collected, organized, categorized, or presented to users. These biases can manifest through preferential treatment of certain data types, methodologies, or information sources, affecting the system’s ability to provide balanced, comprehensive knowledge.

The common sources of AI bias in knowledge management systems typically fall into three key categories:

- Data collection and representation: When training data for Machine Learning (ML) algorithms contains historical biases, lacks diversity, or over-represents certain data points, the resulting AI systems will likely perpetuate these imbalances. Knowledge management systems trained on limited or skewed data sets fail to capture the full spectrum of relevant information and insights from your organization’s substantial market research.

- Algorithm design and training: The choices made during algorithm development, including which features to prioritize, how to weigh different inputs, and what success metrics to optimize for, can introduce implicit biases into the system. Even well-intentioned developers may inadvertently encode their personal biases into AI processes through these technical decisions, affecting how your organization’s valuable market research is interpreted within your chosen knowledge management system.

- User interactions and feedback: As users engage with knowledge management systems, their queries, selections, and feedback create continuous feedback loops that can amplify existing biases. Systems designed to personalize content based on user behavior might create filter bubbles that limit exposure to diverse data points within your organization’s knowledge base, further entrenching biased viewpoints.

Data collection, algorithm design, and user interactions all contribute to potential biases within AI-driven knowledge management. These sources underscore the inherent complexity of ensuring fairness and accuracy, particularly when left unchecked.
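The feedback-loop effect described above can be illustrated with a toy simulation. This is a minimal sketch under stated assumptions, not a model of any real system: the source names, the starting click counts, and the rule that the top-ranked source receives a fixed 70% of each round's clicks are all hypothetical.

```python
def simulate_feedback_loop(initial_clicks, rounds=10, top_share=0.7,
                           clicks_per_round=100):
    """Toy model: sources are ranked by past clicks, and the top-ranked
    source receives `top_share` of every new round of clicks."""
    clicks = dict(initial_clicks)
    history = []
    for _ in range(rounds):
        # Rank sources by accumulated clicks (the "personalization" signal).
        ranked = sorted(clicks, key=clicks.get, reverse=True)
        leader, others = ranked[0], ranked[1:]
        clicks[leader] += top_share * clicks_per_round
        # The remaining clicks are split evenly among lower-ranked sources.
        for source in others:
            clicks[source] += (1 - top_share) * clicks_per_round / len(others)
        total = sum(clicks.values())
        history.append({s: round(c / total, 3) for s, c in clicks.items()})
    return history


# Hypothetical starting point: two equally useful sources, one slightly ahead.
history = simulate_feedback_loop(
    {"survey_reports": 55, "interview_transcripts": 45}
)
print(history[0])   # shares after the first round
print(history[-1])  # shares after the last round
```

In this sketch a small initial lead grows round after round even though neither source is assumed to be better, which is the kind of self-reinforcing pattern a filter bubble produces.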

The consequences of AI bias in knowledge management

Unaddressed AI bias, especially within enterprise knowledge management systems, can have far-reaching implications for organizations that depend heavily on AI technology to inform their strategic initiatives.

Examples of AI bias include a system disproportionately recommending products to male users based on historical sales data, a report underrepresenting the market potential of a demographic group due to biased training data, or a customer feedback analysis failing to recognize nuanced criticism expressed in non-standard language. These instances can lead to skewed insights, unfair outcomes, and damaged brand reputation.

Understanding these potential impacts is essential for protecting your significant investment in market research:

- Skewed insights and inaccurate reporting: Biased knowledge systems provide distorted views of available market research, leading to flawed analysis and recommendations. For example, AI might mistake individual customer quotes or questionnaire statements for representative data, misinterpret sample compositions, or fail to differentiate between study background and actual research findings. This results in strategic miscalculations and missed opportunities, especially when biases exclude relevant data or methodologies.

- Unfair or discriminatory outcomes: Knowledge management systems that exhibit bias can perpetuate or even amplify existing inequities within internal organizational processes and decision frameworks. This systematic skewing may manifest in how market segments are prioritized, how research resources are allocated, or how product development efforts are directed based on incomplete or biased patterns identified by the system.

- Damage to brand reputation: As awareness of algorithmic biases grows, stakeholders increasingly expect organizations to ensure fairness in their technology applications. Companies whose knowledge systems produce biased results risk undermining the credibility of their market research, potentially wasting the substantial investments made in data collection and analysis.

Ultimately, the consequences of AI bias within knowledge management pose a significant threat to an organization’s strategic foundation. From skewed insights that lead to flawed decisions, to unfair outcomes that damage internal processes, and reputational harm that erodes stakeholder trust, the risks are substantial. Therefore, proactively addressing and mitigating these biases is not merely a technical concern, but a strategic imperative for any organization relying on AI-driven knowledge.

Identifying AI bias within your knowledge management system

Detecting bias within an AI-driven knowledge management system requires both systematic evaluation and critical analysis of the system’s outputs and performance. While many AI-driven systems offer bias detection tools, it’s important to note that not all knowledge management systems are equally equipped, necessitating a careful human evaluation of individual platforms.

The key indicators of AI bias can appear in several forms. Here’s what to look for:

Disproportionate representation of certain groups

When reviewing knowledge management outputs (search results, reports, data insights, and so on), examine whether certain market segments, geographical regions, or business units receive disproportionately high or low representation. This imbalance often manifests as over-emphasis on majority data points while alternative viewpoints receive limited coverage or depth. Look for patterns in how your system categorizes and prioritizes information related to different business segments, as these patterns often reveal underlying biases in how knowledge is structured and surfaced within your organization’s knowledge base.

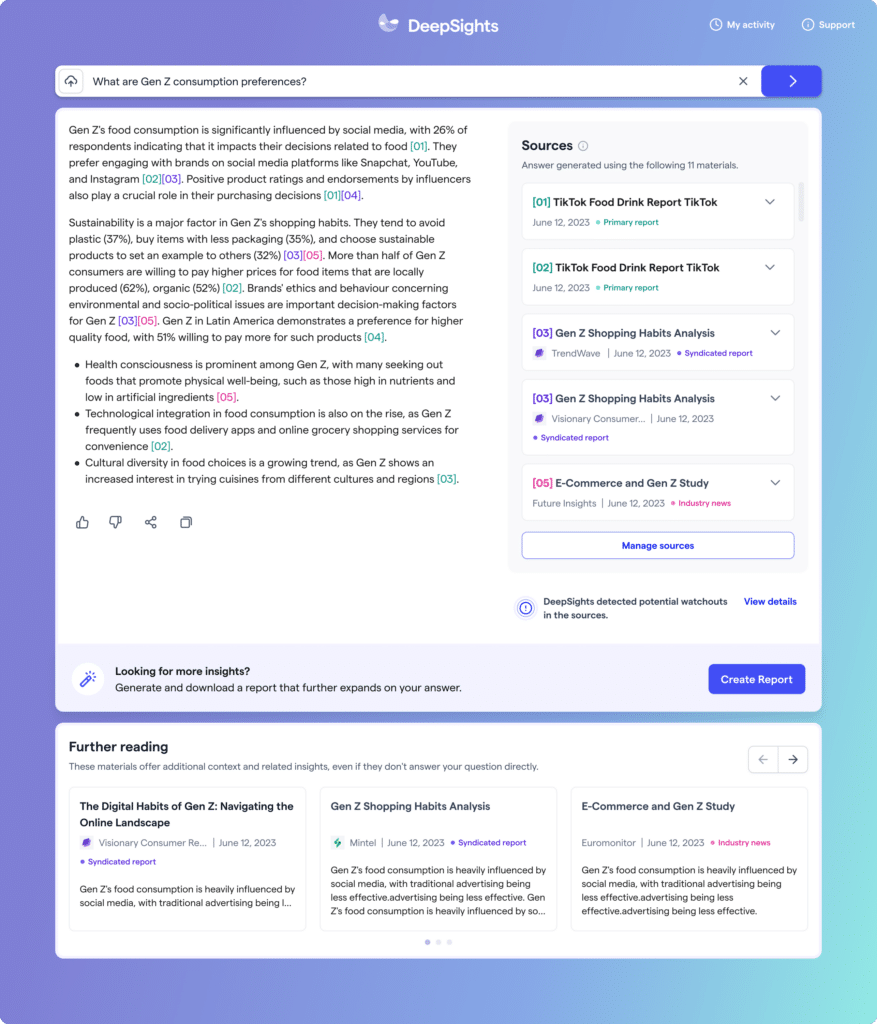
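As a rough illustration of this kind of check, the sketch below compares each segment's share of a set of retrieved results with a baseline share you would expect. The function name, the region labels, the baseline figures, and the 10-point tolerance are all assumptions made for the example; in practice the baseline might come from the composition of your knowledge base or your market.

```python
from collections import Counter


def representation_gaps(result_segments, baseline_shares, tolerance=0.10):
    """Return {segment: observed_share - baseline_share} for every segment
    whose absolute gap exceeds `tolerance`."""
    total = len(result_segments)
    if total == 0:
        return {}
    counts = Counter(result_segments)
    gaps = {}
    for segment, expected in baseline_shares.items():
        observed = counts.get(segment, 0) / total
        gap = observed - expected
        if abs(gap) > tolerance:
            gaps[segment] = round(gap, 3)
    return gaps


# Hypothetical data: the segment tag of each of 20 retrieved documents,
# and the share of the knowledge base each segment is expected to hold.
results = ["EMEA"] * 14 + ["APAC"] * 4 + ["LATAM"] * 2
baseline = {"EMEA": 0.40, "APAC": 0.35, "LATAM": 0.25}

flagged = representation_gaps(results, baseline)
print(flagged)  # positive = over-represented, negative = under-represented
```

A flagged gap is a prompt for review rather than proof of bias, since some queries legitimately concern one segment more than others.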

Given the sheer volume of data involved, identifying these imbalances manually is time-consuming and complex. This underscores the necessity of a trusted AI-powered KM solution, like DeepSights™, to automate the detection of such biases within search queries to ensure a balanced representation of all data segments.

DeepSights enables access to all data sources, providing a comprehensive view of your market research and insights. This capacity allows users to examine the breadth of their knowledge base and identify potential gaps or imbalances in representation across different segments.

Inconsistent performance across different demographics

AI bias frequently reveals itself through varying levels of accuracy, comprehensiveness, or relevance when serving different business units or analyzing different market segments. Therefore, it’s necessary to monitor whether your knowledge management system provides equally useful responses regardless of which department is using it or what segments they’re exploring.

Systematic differences in response quality or depth when handling queries related to different business areas often indicate embedded biases in how the system applies natural language processing (NLP) to requests or prioritizes knowledge retrieval from your market research database. However, it’s important to differentiate between genuine bias and variations in output that stem from naturally occurring differences in the amount of data available for different departments or market segments.
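One way to separate these two explanations is to look at response quality and data volume side by side. The following is a minimal sketch, assuming you have expert ratings of answers (scored 0 to 1) tagged by segment and a document count per segment; the function name, segment names, scores, and thresholds are hypothetical.

```python
from statistics import mean


def quality_by_segment(ratings, doc_counts, gap_threshold=0.15, min_docs=50):
    """Flag segments whose mean answer rating trails the overall mean by
    more than `gap_threshold`, and note whether sparse data could explain it."""
    by_segment = {}
    for segment, score in ratings:
        by_segment.setdefault(segment, []).append(score)
    overall = mean(score for _, score in ratings)

    report = {}
    for segment, scores in by_segment.items():
        gap = mean(scores) - overall
        if gap < -gap_threshold:
            sparse = doc_counts.get(segment, 0) < min_docs
            report[segment] = (
                "likely data scarcity" if sparse else "investigate for bias"
            )
    return report


# Hypothetical expert ratings of answers, tagged by the segment queried.
ratings = [
    ("retail", 0.90), ("retail", 0.80),
    ("b2b", 0.85), ("b2b", 0.75),
    ("youth", 0.40), ("youth", 0.50),
    ("rural", 0.45), ("rural", 0.35),
]
# Hypothetical number of source documents available per segment.
doc_counts = {"retail": 400, "b2b": 320, "youth": 300, "rural": 12}

report = quality_by_segment(ratings, doc_counts)
print(report)
```

Here a weak segment with plenty of source material is the more suspicious case, while a weak segment with very few documents points first to a coverage problem.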

DeepSights supports market intelligence reliability by drawing on a knowledge base sourced entirely from validated information, so users review trustworthy data and the risk of AI hallucinations and human bias is minimized. By leveraging its unique purpose-built RAG, the insights platform dynamically prioritizes and delivers the most relevant market intelligence sources for each inquiry. Deep evidence analysis then confirms the contextual accuracy of retrieved findings, uncovering inconsistencies and potential biases.

Unexpected or unexplained patterns in data

Carefully examine knowledge outputs for correlations or trends that seem unfounded or contradict established understanding from your organization’s market research. Be aware that while these patterns can indicate bias, they might also reveal genuine insights that challenge existing assumptions. Therefore, if your knowledge management system consistently produces results that experts find surprising or questionable, it’s crucial to investigate the system’s processing logic for potential algorithmic biases.

DeepSights connects all knowledge sources, revealing relationships and patterns across disparate data sets, which can help users identify unexpected correlations that might indicate underlying biases. To investigate these patterns, DeepSights allows users to trace data back to its original sources, ensuring validation and contextual accuracy. This empowers experts to not only detect potential biases but also understand their origins and ensure the reliability of their insights.

Practical strategies for mitigating AI bias within a knowledge management system

Mitigating AI bias requires a multifaceted approach that goes beyond simply adding safeguards. It necessitates a foundation built on trusted, purpose-built knowledge management AI technology, specifically designed for market intelligence. Generic RAG-based tools often struggle to deliver truly relevant insights, are overwhelmed by tangentially related information, and lack the refined methods to discern critical insights. Therefore, choosing a purpose-built RAG solution is a crucial starting point for any organization aiming to build equitable knowledge systems. To effectively mitigate bias, organizations should implement proactive strategies across the knowledge management lifecycle.

The following approaches can help you mitigate AI bias when assessing your knowledge systems:

Improving data quality and diversity

To ensure representative data collection from market research, systematically audit primary sources for coverage gaps across market segments and methodologies. Establish protocols for evaluating new data, especially for potential blind spots. Augment data sets with diverse sources, incorporating knowledge from underrepresented areas to balance training data for ML algorithms. Continuously monitor the knowledge base, updating it to reflect current realities and addressing outdated or insufficient information, particularly for emerging market segments.
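A coverage audit of this kind can start as a simple cross-tabulation of segments against methodologies. The sketch below assumes each study in your inventory carries a segment and a methodology tag; the field names, labels, and the one-study minimum are hypothetical.

```python
from itertools import product


def coverage_gaps(studies, segments, methodologies, min_studies=1):
    """List every (segment, methodology) pair covered by fewer than
    `min_studies` studies."""
    counts = {pair: 0 for pair in product(segments, methodologies)}
    for study in studies:
        key = (study["segment"], study["methodology"])
        if key in counts:
            counts[key] += 1
    return sorted(pair for pair, n in counts.items() if n < min_studies)


# Hypothetical inventory of studies in the knowledge base.
studies = [
    {"segment": "Gen Z", "methodology": "survey"},
    {"segment": "Millennials", "methodology": "survey"},
    {"segment": "Millennials", "methodology": "interview"},
    {"segment": "Millennials", "methodology": "survey"},
]

gaps = coverage_gaps(
    studies,
    segments=["Gen Z", "Millennials"],
    methodologies=["survey", "interview"],
)
print(gaps)  # [('Gen Z', 'interview')]
```

Raising `min_studies` turns the same check into a test for thin rather than missing coverage.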

Generative AI (Gen AI) models sometimes produce incorrect, biased, or misleading information (AI hallucinations). Inaccurate AI-generated insights can severely erode trust and lead to poor business decisions that result in substantial losses. No organization, whatever its size, can afford to make decisions based on unreliable information. Deploying AI without rigorous data quality checks and bias mitigation strategies can cause significant financial and reputational damage, which underscores the critical need for robust validation processes.

DeepSights broadens the range of data under review and helps identify coverage gaps. Its source tracing capability validates the underlying information, supporting accurate and diverse insights that reflect your market segments and mitigating bias for a more equitable KM system.

Enhancing algorithm transparency and explainability

To enhance algorithm transparency, prioritize interpretable AI models that clearly demonstrate their reasoning, enabling easier identification of biases in market research interpretation. Document algorithm design and training, maintaining records of data, parameters, and performance to measure bias reduction. Establish clear accountability for AI systems, designating roles for monitoring, investigation, and adjustment to ensure fairness across knowledge management.

DeepSights enhances transparency by delivering comprehensive answers with clear source citations, allowing users to both access the underlying evidence and trace the reasoning behind the conclusions. This traceability shows how conclusions are reached, which makes it easier to identify and correct potential biases in the system’s reasoning. It also gives teams an evidence-based foundation to justify their insights, so stakeholders can make reliable, substantiated decisions.

Implementing ongoing monitoring and evaluation

To ensure continuous fairness, establish feedback loops that empower users to flag potentially biased outputs. Create accessible mechanisms for stakeholders and teams to report concerns, and develop clear protocols for timely investigation and resolution. Schedule regular reviews and updates of AI systems, assessing performance against fairness metrics and adjusting algorithms to address disparities. Beyond these technical adjustments, cultivate a culture of ethical AI practices by providing training on algorithmic biases, integrating fairness considerations into development, and recognizing teams for proactive bias mitigation.
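A user flagging mechanism becomes more useful once the flags are aggregated. The following is a minimal sketch, assuming a log of served answers where each entry records the segment queried and whether a user flagged the answer as potentially biased; the function name, segment labels, and thresholds are hypothetical.

```python
from collections import defaultdict


def segments_needing_review(events, ratio=2.0, min_answers=5):
    """Return {segment: flag_rate} for segments whose flag rate is at least
    `ratio` times the overall flag rate, given enough answers to judge."""
    served = defaultdict(int)
    flagged = defaultdict(int)
    for segment, was_flagged in events:
        served[segment] += 1
        if was_flagged:
            flagged[segment] += 1

    total_served = sum(served.values())
    total_flagged = sum(flagged.values())
    if total_served == 0 or total_flagged == 0:
        return {}
    overall_rate = total_flagged / total_served

    review = {}
    for segment, n in served.items():
        rate = flagged[segment] / n
        if n >= min_answers and rate >= ratio * overall_rate:
            review[segment] = round(rate, 3)
    return review


# Hypothetical feedback log: (segment queried, did the user flag the answer?)
events = (
    [("premium", False)] * 18 + [("premium", True)] * 2
    + [("budget", False)] * 5 + [("budget", True)] * 5
)

queue = segments_needing_review(events)
print(queue)
```

Segments returned by such a check would be natural candidates for the scheduled reviews described above.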

Building trust in AI-powered KM applications is paramount. DeepSights facilitates ongoing monitoring and evaluation by providing detailed source information within its outputs, enabling easier identification of potential biases and validation of data used in AI processes.

Build a trustworthy future for your KMS with DeepSights

Ensuring you have a reliable KMS requires a core design that prioritizes trusted, purpose-built AI technology for market intelligence and insights generation, coupled with continuous monitoring and rigorous data validation. This means organizations must adopt proactive strategies to ensure fair and unbiased AI systems, recognizing that AI’s effectiveness hinges on the quality of the data it retrieves and uses.

As AI increasingly drives critical decisions, maintaining fair and unbiased systems is essential. This demands ongoing adaptation and organizational alignment, so that ethical AI becomes a fundamental business imperative. By leveraging solutions like DeepSights, which features a purpose-built RAG, organizations can increase transparency and maximize the reliability of their insights, ultimately delivering trusted knowledge consistently.

DeepSights offers enterprise organizations a trusted solution for managing AI bias through its comprehensive knowledge management capabilities. By providing full visibility into knowledge sources, ensuring transparent citation of information, and enabling connections across disparate data repositories, DeepSights empowers your organization to build and maintain more equitable, accurate knowledge systems that deliver reliable insights. Schedule a demo today to learn more.